Reference:

LLVM Atomic Instructions and Concurrency Guide

There are only 2 places need barrier:

- processing invalid queue (RMB)

- write store buffer to cache. (WMB)

Other places, like read pull request, response to read request, mark as share, do NOT participate with barrier!

If using bit fields, this is an important point to note

Though adjacent bit fields are distinct objects, they’re still counted as the same memory location.

The bit fields bf1 and bf2 share a memory location, and the std::string object s

consists of several memory locations internally,

but otherwise each member has its own memory location.

Note how the zero-length bit field marked /*bf3*/

(the name is commented out because zero-length bitfields must be unnamed)

separates bf4 into its own memory location, but doesn't have a memory location itself.

- Every variable is an object, including those that are members of other objects.

- Every object occupies at least one memory location.

- Variables of fundamental type such as int or char are exactly one memory location, whatever their size, even if they’re adjacent or part of an array.

- Adjacent bit fields are part of the same memory location.

Everything hinges on those memory locations. If two threads access separate memory locations, there’s no problem: everything works fine. On the other hand, if two threads access the same memory location, then have to be careful.

Modification orders

class template isn’t just a set of specializations, though.It does have a primary template that can be used to create an atomic variant of a user-defined type.

Because it’s a generic class template, the operations are limited to:

- load()

- store()

- assignment from and conversion to the user-defined type

- exchange()

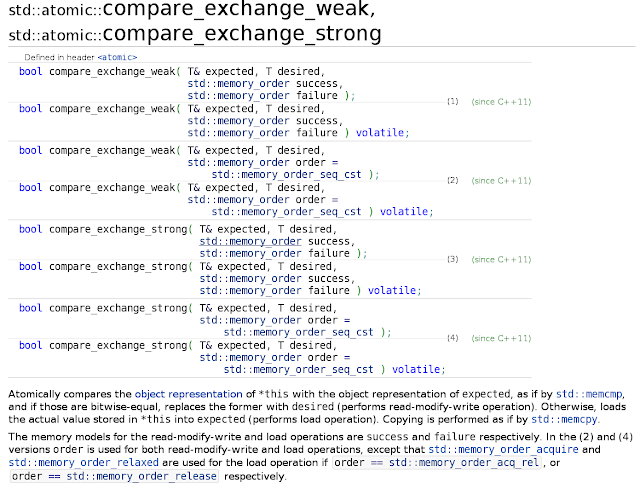

- compare_exchange_weak()

- compare_exchange_strong()

The operations are divided into three categories:

- Store operations, which can have

- memory_order_relaxed,

- memory_order_release, or

- memory_order_seq_cst

ordering - Load operations, which can have

- memory_order_relaxed ,

- memory_order_consume,

- memory_order_acquire, or

- memory_order_seq_cst

- ordering

- Read-modify-write operations, which can have

- memory_order_relaxed,

- memory_order_consume,

- memory_order_acquire ,

- memory_order_release,

- memory_order_acq_rel, or

- memory_order_seq_cst

ordering

Interface:

bool compare_exchange_weak(T& expected, T desired, std::memory_order order = std::memory_order_seq_cst);

This allows for the memory-ordering semantics to differ in the

case of success and failure.

It might be desirable for

a successful call to have:

[memory_order_acq_rel] semantics

whereas a failed call has:

[memory_order_relaxed] semantics.

A failed compare/exchange doesn’t do a **store**,

so it can’t have

[memory_order_release] or [memory_order_acq_rel] semantics.

It’s therefore not permitted to supply these values as the ordering for failure.

You also can’t supply stricter memory ordering for failure than for success;

If you want [memory_order_acquire] or [memory_order_seq_cst]

semantics for failure,you must specify those for success as well.

If you don’t specify an ordering for failure,

it’s assumed to be the same as that for success,

except that the release part of the ordering is stripped:

[memory_order_release] becomes [memory_order_relaxed],

**and [memory_order_acq_rel] becomes [memory_order_acquire].**

(failure是在做load.)

If you specify neither, they default to [memory_order_seq_cst]

as usual, which provides the full sequential ordering for both success and failure.

Following two calls to compare_exchange_weak() are equivalent:

std::atomic<bool> b; bool expected; b.compare_exchange_weak(expected,true, memory_order_acq_rel,memory_order_acquire); b.compare_exchange_weak(expected,true,memory_order_acq_rel);

----------------

Fully specialized std::atomic template:

In order to use std::atomic<UDT> for some user-defined type UDT ,

this UDT type must have a trivial copy-assignment operator.

重點是:

This essentially permits the compiler to use memcpy()

or an equivalent operation for assignment operations,

because there’s no user-written code to run.

The type must be bitwise equality comparable.

Not only must you be able to copy an object of type UDT using

memcpy(), but you must be able to compare instances for equality using memcmp().

This guarantee is required in order for compare/exchange operations to work.

In general, the compiler isn’t going to be able to generate lock-free code

for std::atomic<UDT>, so it will have to use an internal lock for all the operations.

These restrictions increase the chance that the compiler will be able to make use of atomic instructions directly for std::atomic<UDT>

(and thus make a particular instantiation lock-free),

because it can just treat the user-defined type as a set of raw bytes.

----------

關於使用float value:

Note that although you can use std::atomic<float> or std::atomic<double>,

because the built-in floating point types do satisfy the criteria for use

with memcpy and memcmp, the behavior may be surprising in the case of

compare_exchange_strong.

The operation may fail even though the old stored value was equal in value to the comparand, if the stored value had a different representation.

Note that there are no atomic arithmetic operations on floating-point values.

You’ll get similar behavior with compare_exchange_strong if you use

std::atomic<> with a user-defined type that has an equality-comparison

operator defined, and that operator differs from the comparison using memcmp—the operation may fail because the otherwise-equal values have a different representation.

If your UDT is the same size as (or smaller than) an int or a void* ,

most common platforms will be able to use atomic instructions for std::atomic<UDT>.

Some platforms will also be able to use atomic instructions for user-defined types that are twice the size of an int or void*.

These platforms are typically those that support a so-called

double-word-compare-and-swap ( DWCAS ) instruction corresponding to the

compare_exchange_xxx functions.

------------

If the operations occur in the same statement, in general there’s no happens-before relationship between them, because they’re unordered.

**

If you make a series of changes to data in a single thread, you need

only one synchronizes-with relationship for the data to be visible to subsequent operations on the thread that executed C.

------------

Although there are six ordering options, they represent three

models:

- sequentially consistent ordering (memory_order_seq_cst)

- acquire-release ordering (memory_order_consume, memory_order_acquire, memory_order_release memory_order_acq_rel)

- relaxed ordering (memory_order_relaxed).

------------

CPUs that use the x86 or x86-64 architectures

(such as the Intel and AMD processors common in desktop PCs)

don’t require any additional instructions for acquire-release ordering beyond those necessary for ensuring atomicity, and even sequentially-consistent ordering doesn’t require any special treatment for load operations, although there’s a small additional cost on stores.

x86CPU会自动处理store顺序,所以smp_wmb()原语什么也

不做,但是load有可能乱序,smp_rmb()和smp_mb()展开为lock;addl。

x86: improved memory barrier implementation

------------

threads don’t have to agree on the order of events.

In the absence of other ordering constraints, the only

requirement is that all threads agree on the modification order of each individual variable.

------------

RELAXED ORDERING:

Operations on atomic types performed with relaxed ordering don’t participate in synchronizes-with relationships.

!!

Operations on the same variable within a single

thread still obey happens-before relationships, but there’s almost no requirement on ordering relative to other threads.

The only requirement is that accesses to a single

atomic variable from the same thread can’t be reordered; once a given thread has seen a particular value of an atomic variable, a subsequent read by that thread can’t retrieve an earlier value of the variable.

1. 同一個thread仍有同一個 variable 的 happen before relationship

2. 同一個thread同一個variable不能被either compiler reordered or memory reordering.

3. 同一個thread但不同variable,compiler仍能做reordering!!

------------

ACQUIRE-RELEASE: (沒有總體的view。每個thread看到的world不一樣。)

因為... acquire/release 為pairwise:

一個thread/cpu core 的 invalid queue 並沒有被來得及update through BUS,

因此根本無從依據invalid queue然後 send out read request to the update CORE。

與sequential consistence不同的是,sequential consistence是等所有CPU core均將此value 放到所有variable shared core的invalid queue之後,才release variable,因此,所有CORE看到的是統一的 state。

---

about SC:

These days, you won’t easily find a modern multicore device which guarantees

sequential consistency at the hardware level.

Sequential consistency only really becomes interesting as

a software memory model, when working in higher level programming languages.

---

No total order of operations.

atomic loads are acquire operations (memory_order_acquire),

atomic stores are release operations (memory_order_release),

atomic read-modify-write operations (such as fetch_add() or exchange()) are either acquire, release, or both (memory_order_acq_rel).

*

Synchronization is pairwise, between the thread that does

the release and the thread that does the acquire.

*

A release operation synchronizes-with an acquire operation that reads the value written.

*

This means that different threads can still

see different orderings, but these orderings are restricted.

This is possible because there’s no happens-before relationship to force an

ordering.

In order to see the benefit of acquire-release ordering, you need to consider two stores from the same thread.

------------

TRANSITIVE SYNCHRONIZATION WITH ACQUIRE-RELEASE ORDERING:

Need at least three threads.

The first thread modifies some shared variables and does a store-release to one of them.

A second thread then reads the variable subject to the store-release with a load-acquire and performs a store-release on a second shared variable.

Finally, a third thread does a load-acquire on that second shared variable. Provided that the load-acquire operations see the values written by the store-release operations to ensure the synchronizes-with relationships, this third thread can read the values of the other variables stored by the first thread, even if the intermediate thread didn’t touch any of them.

------------

DATA DEPENDENCY WITH ACQUIRE-RELEASE ORDERING AND MEMORY_ORDER_CONSUME:

There are two new relations that deal with data dependencies:

1. dependency-ordered-before

2. carries-a-dependency-to

Just like sequenced-before, carries-a-dependency-to applies strictly within a single thread and essentially models the data dependency between operations;

if the result of an operation A is used as an operand for an operation B,

then A carries-a-dependency-to B.

If the result of operation A is a value of a scalar type such as an int,

then the relationship still applies if the result of A is stored in a variable,

and that variable is then used as an operand for operation B.

This operation is also transitive, so if A carries-a-dependency-to B,

and B carries-a-dependency-to C, then A carries-a-dependency-to C.

On the other hand, the dependency-ordered-before relationship can apply

between threads. It’s introduced by using atomic load operations tagged with

memory_order_consume.

This is a special case of memory_order_acquire that limits the synchronized data to direct dependencies;

a store operation A tagged with memory_order_release, memory_order_acq_rel, or memory_order_seq_cst is dependency-ordered-before a load operation B tagged with memory_order_consume if the consume reads the value stored.

This is as opposed to the synchronizes-with relationship you get if the load uses memory_order_acquire.

If this operation B then carries-a-dependency-to some operation C, then A is also dependency-ordered-before C.

------------

Release sequences and synchronizes-with:

If the store is tagged with

memory_order_release,

memory_order_acq_rel,

or memory_order_seq_cst,

and the load is tagged with

memory_order_consume,

memory_order_acquire,

or memory_order_seq_cst,

and each operation in the chain loads the value written by the previous operation,

then the chain of operations constitutes a release sequence and the initial store synchronizes-with

(for memory_order_acquire or memory_order_seq_cst) or is dependency-ordered-before (for memory_order_consume) the final load.

Any atomic read-modify-write operations in the chain can have any memory

ordering (even memory_order_relaxed).

-------------

subsequent memory operations to be advanced before it.

Conversely, an operation with release semantics is one which does not permit preceding memory operations to be delayed past it.

In the language of C++11, only a store can be a release operation, and only a load can be an acquire operation.

The release operation on the left,

m_instance.store(tmp,std::memory_order_release) ,

actually places fewer memory ordering constraints on neighboring operations than the release fence on the right.

A release operation (such as the one on the left) only needs to prevent preceding memory operations from being reordered past itself.

But a release fence (such as the one on the right) must prevent preceding memory operations from being reordered past all subsequent writes.

Because of this difference, a release operation can never take the place of a release fence.

Consider what happens when we take the code listing on the

right, and replace the release fence with a release operation on a separate atomic variable, g_dummy :

Singleton* tmp = new Singleton; g_dummy.store(0, std::memory_order_release); m_instance.store(tmp, std::memory_order_relaxed);

The store to m_instance is now free to be reordered before the store to g_dummy , and possibly before any stores performed by the Singleton constructor.

----------------

Load-link/store-conditional

Non-blocking_algorithm

Memory_barrier

No comments:

Post a Comment

Note: Only a member of this blog may post a comment.