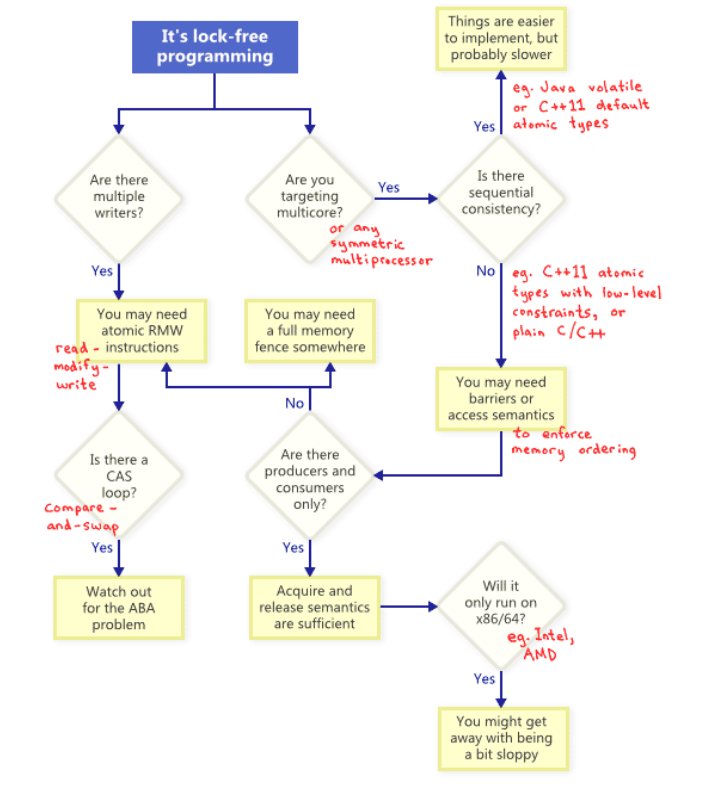

figure from : An Introduction to LockFree Programming

- Lock-free programming is hard, be sure you need it

- Never guess about performance

- Lock-free programming is mostly about memory order

- Know potential dangers of locks and how to avoid them

- Design lock-free data structures, not algorithm.

- Avoid writing generic lock-free code

- Minimize data sharing, know exactly what is shared

- Design in terms of atomic transactions

- Lock-free data structures usually need special interfaces

- Take every advantage of practical limitations

- Use DCLP to handle special cases

(from book: The Art of Multiprocessor Programming)

- Wait-free programs: each thread will complete its task in finite number of steps (finite amount of time) no matter what other threads are doing

— At all times, all threads make progress toward the final result - Lock-free programs: programs without locks (duh!); at least one thread makes progress no matter what other threads are doing

- Obstruction-free program: thread makes progress as long as no other thread accesses shared data at the same time

- Lock-based programs are not guaranteed to make progress toward the result in all cases

— In particular, consider what would happen if the thread holding the lock was suspended by the OS?

Problems With Locks

- (Not a problem) Locks are simpler than any alternative

— Most programs are not lock-free

— Know problems with locks not just to avoid locks but also to avoid - problems when using locks

- Deadlock

- Livelock

- Priority Inversion

- Convoying (lock in same order. Prevent dead lock)

- Reentrance and Signal safety

- Shared memory between processes

- Preemption tolerance

- Performance

- Performance of a well-written lock program may be no different from that of a lock-free program

- Lock-based program ≠ mutex-based program!

- Overhead of data sharing depends on the amount of data

- Mutexes have high overhead – a lot of shared data

- Spinlocks have one shared word

— Plus the shared data they protect - If a lock-free program needs to share several pieces of data to arrange lock-free synchronization, it may end up being slower than a program with spinlocks

Practical problems with locks

- In practice a lot of these problems may not matter

— I write HPC code, I don’t have priority threads, I just want the answer as fast as possible.

— Sure, the thread holding the lock can get suspended, but how often does it really happen?

— If a lock protects an atomic transaction with no user code inside, this cannot deadlock or livelock.

— I usually know what can and cannot be in shared memory - In practice, the main motivation to get rid of locks is performance

— Locks can make your program slow

— Lock-free does not always make it faster - In some special cases lock-free program may be simpler!

In practice, the main motivation to get rid of locks is performance

- Getting rid of locks does not automatically make the program faster

- How to atomically increment two 64-bit integers?

— Some CPUs have 128-bit CAS (DCAS)

— There is a really clever algorithm with CAS and a 3 rd integer

— Do it under a spinlock or use atomic exchange and busy-wait

— On a wide range of x86 systems, even under heavy congestion spinlock is faster than CAS for up to 40-64 cores and always faster than DCAS - The fastest is to not atomically increment two 64-bit integers

(and practical solutions)

- Wait-Free and Lock-Free programs do not suffer from deadlocks, livelocks, priority-inversion or convoying; they can be reentrant and signal-safe; they can support shared memory.

- Lock-Free and Wait-Free algorithms are usually very hard to write, understand, and maintain.

- minimize data sharing at the algorithm level.

- use spinlock (or equivalent) for accessing shared data.

- avoid designing lock-free algorithms, design lock-free data structures, it’s easier and usually is what you want.

- design only what you need, avoid generic designs

— Does not work if you are writing STL

Generic lock-free queue is very hard to write

- Problems:

— Another atomically incremented counter for “front”

— What to do when queue is empty

— What to do when queue runs out of memory - Generic lock-free queue is not always faster than a well-written non-thread-safe queue with a spinlock

Data Sharing is Expensive

- Non-thread-safe data access is faster than synchronized data access.

— Simplest case: non-atomic vs atomic access

— Avoids the unknown overhead of locking - Why is synchronized access more expensive?

- Cache coherency must be maintained in hardware.

- Side note: circuits for caches and their maintenance are the largest part of modern CPUs and the limiting factor in increasing the number of cores.

— Some CPU architectures give up on cache coherency altogether for that reason (Cell processor)

Performance myth:

Comparing general lock-free queue with spinlocked

wrapper around std::queue

- Results are inconsistent, varies a lot between CPU architectures (Haswell, Nehalem, Sandy Bridge) and machine configurations (number of CPUs, number of sockets)

- Results depend strongly on access patterns and congestion

- If there is a trend, it’s slightly in favor of std::queue (on the set of machines I have access to and set of access patterns tested)

- Subtle implementation details matter, such as having top and bottom pointers on separate cache lines

Special-case queues can be much faster :

- Maximum number of elements known in advance

- One producer thread, many consumer threads : 1P:NC

- One consumer thread, many producer threads: NP:1C

- Very different implementations but well worth it

Generic lock-free queue is very hard to write

- Problems:

— Another atomically incremented counter for “front”

— What to do when queue is empty

— What to do when queue runs out of memory - Generic lock-free queue is not always faster than a well-written non-thread-safe queue with a spinlock

- But a specialized lock-free queue often is!

(Exam the interface!)

- Each member function is thread-safe

- A thread-safe data structure can, at most, guarantee that each member function call is a transaction

— Whether lock-free or guarded by a lock - That transaction must be useful to the caller

No comments:

Post a Comment

Note: Only a member of this blog may post a comment.