Reference:

https://www.codeproject.com/Articles/1183423/We-Make-a-std-shared-mutex-10-Times-Faster

https://en.wikipedia.org/wiki/Register_allocation#Spilling

https://en.wikipedia.org/wiki/MESIF_protocol

http://www.open-std.org/jtc1/sc22/wg21/docs/papers/2016/n4606.pdf

https://www.cl.cam.ac.uk/~pes20/cpp/cpp0xmappings.html

Concurrent Data Structures library:

https://github.com/khizmax/libcds

compound operations

i.e RMW(read-modify-write)

The operation a = a+1; consists of at least three mini-operations:

- Load the value of the variable "a" into the CPU register

- Add 1 to the value in the register

- Write the value of the register back into the variable "a"

3 ways to avoid data race

- Use atomic instructions with atomic variables; however, it's difficult to realize complex logic.

- Complex lock-free algorithms for each new container.

- Use locks. Admit 1 thread, one by one, to the locked code, so the problem of data-races does not arise and we can use arbitrary complex logic by using any normal not thread-safe objects.

Difference between std::atomic and volatile in C++11

1. Optimizations

For

std::atomic<T> a;

two optimizations are possible, which are impossible for volatile T a; // always spilled to memory.

std::atomic<T> a;

two optimizations are possible, which are impossible for volatile T a; // always spilled to memory.

Optimization of the fusion:

a = 10; a = 20;

can be replaced by compiler with

a = 20;

Optimization of substitution by a constant:

a = 1; local = a;

can be replaced by the compiler:

a = 1; local = 1;

2. Reordering

std::atomic<T> a;

operations can limit reordering around themselves for operations with the ordinary variables and operations with other atomic variables in accordance with the used memory barrier std::memory_order_...

volatile T a;

does not affect the order of regular variables (non-atomic / non-volatile), but the calls to all volatile variables always preserve a strict mutual order, i.e., the order of execution of any two volatile-operations cannot be changed by the compiler, and can by the CPU.

The compiler cannot reorder operations on volatile variables at compile-time, but the compiler allows the CPU to do this reordering at run-time.

3. Spilling

std::memory_order_release, std::memory_order_acq_rel, std::memory_order_seq_cst memory barriers, which are specified for

std::atomic<T> a;

These barriers upload the regular variables from the CPU registers into the main memory/cache, except when the compiler can guarantee 100% that this local variable cannot be used in other threads.

4. Atomicity / alignment

For

std::atomic<T> a;

other threads see that operation has been performed entirely or not performed at all.

For Integral types T, this is achieved by alignment of the atomic variable location in memory by compiler - at least, the variable is in a single cache line, so that the variable can be changed or loaded by one operation of the CPU.

Conversely, the compiler does not guarantee the alignment of the volatile variables.

Volatile variables are commonly used to access the memory of devices (or in other cases), so an API of the device driver returns a pointer to volatile variables, and this API ensures alignment if necessary.

For Integral types T, this is achieved by alignment of the atomic variable location in memory by compiler - at least, the variable is in a single cache line, so that the variable can be changed or loaded by one operation of the CPU.

Conversely, the compiler does not guarantee the alignment of the volatile variables.

Volatile variables are commonly used to access the memory of devices (or in other cases), so an API of the device driver returns a pointer to volatile variables, and this API ensures alignment if necessary.

5. Atomicity of RMW operations (read-modify-write)

For

std::atomic<T> a;

operations ( ++, --, += , -= , *=, /=, CAS, exchange) are performed atomically,

i.e., if two threads do operation ++a; then the a-variable will always be increased by 2.

i.e., if two threads do operation ++a; then the a-variable will always be increased by 2.

This is achieved by locking cache-line (x86_64) or by marking the cache line on CPUs that support LL/SC(Load-link/store-conditional) (ARM, PowerPC) for the duration of the RMW-operation.

Volatile variables do not ensure atomicity of compound RMW-operations.

There is one general rule for the variables std::atomic and volatile:

each read or write operation always calls the memory/cache, i.e. the values are never cached in the CPU registers.

Any optimizations and any reordering of independent instructions relative to each other done by the compiler or CPU are possible for ordinary variables and objects (non-atomic/non-volatile).

Recall that operations of writing to memory with atomic variables with std::memory_order_release, std::memory_order_acq_rel and std::memory_order_seq_cst memory barriers guarantee spilling (writing to the memory from the registers) of all non-atomic/non-volatile variables, which are in the CPU registers at the moment, at once: https://en.wikipedia.org/wiki/Register_allocation#Spilling

Changing the Order of Instructions Execution

The compiler and processor change the order of instructions to optimize the program and to improve its performance.

- compiler reordering

- x86_64 CPU reordering

Detail depict

Upon initiating the writing to the memory through

mov b[rip], 5

instruction, the following occurs:

First, the value of 5 and the address of b[rip] are placed in the store-buffer(sb) queue, the cache lines containing the address b[rip] in all the CPU cores are expected to be invalidated and a response from them is being waited.

Then CPU-Core-0 sets the “eXclusive” status for the cache line containing b[rip].

Only after that, the actual writing of the value of 5 from the Store-buffer is carried out into this cache line at b[rip].

In order not to wait all this time - immediately after “5” is placed in the Store-Buffer, without waiting for the actual cache entry, we can start execution of the following instructions: reading from the memory or registers operations. (i.e we could read the value directly from store-buffer instead of from cache)

More weak memory barriers, which allow reordering the instructions in the allowed directions. This allows the compiler and CPU to better optimize the code and increase the performance.

Barriers of Reordering of Memory Operations

enum memory_order {

memory_order_relaxed,

memory_order_consume,

memory_order_acquire,

memory_order_release,

memory_order_acq_rel,

memory_order_seq_cst

};Practically will not use memory_order_consume barrier, because in the standard, there are doubts about the practicability of its usage:

(1.3) — memory_order_consume: a load operation performs a consume operation on the affected memory location. [ Note: Prefer memory_order_acquire, which provides stronger guarantees than memory_order_consume. Implementations have found it infeasible to provide performance better than that of memory_order_acquire. Specification revisions are under consideration. — end note ]

we note that memory_order_acq_rel barrier is used only for atomic compound operations of RMW (Read-Modify-Write), such as: compare_exchange_weak()/_strong(), exchange(), fetch_(add, sub, and, or, xor) or their corresponding operators.

The remaining four memory barriers can be used for any operations, except for the following:

"acquire" is not used for store(), and “release” is not used for load(). (i.e acquire means read, store means write)

Requirements

- memory barriers give us what?

- what lock we want to achieve?

First, spinlock, i.e std::mutex

A spinlock means only allows one thread processes at the time.

Such a code area is called the critical section. Inside it, you can use any normal code, including those without std::atomic<>. - Memory barriers prevent the compiler from optimizing the program so that no operation from the critical section goes beyond its limits.

e.g.

The compiler optimizer is not allowed to move instructions from the critical section to the outside:

- No instruction placed after memory_order_acquire can be executed before it.

- No instruction placed before memory_order_release can be executed after it.

Any other changes in the order of execution of independent instructions can be performed by the compiler

(compile-time) or by the CPU (run-time) in order to optimize the performance.

The thread local dependencies are always stored in a way similar to that of single-threaded execution.

i.e

int a = 0

a = 1 + 42; // - 1

int b = a; // - 2

1 and 2 can not been reordered.

To realize locks (mutex, spinlock ...), we should use Acquire-Release semantics.

§ 1.10.1 (3)

… For example, a call that acquires a mutex will perform an acquire operation on the locations comprising the mutex. Correspondingly, a call that releases the same mutex will perform a release operation on those same locations. Informally, performing a release operation on A forces prior side effects on other memory locations to become visible to other threads that later perform a consume or an acquire operation on A.

Acquire-Release Semantic

The main point of the Acquire-Release semantics is that: Thread-2 after performing the flag.load(std::memory_order_acquire) operation should see all the changes to any variables/structures/classes (not even atomic ones) that have been made by Thread-1 before it executed the flag.store(0, std::memory_order_release) operation.

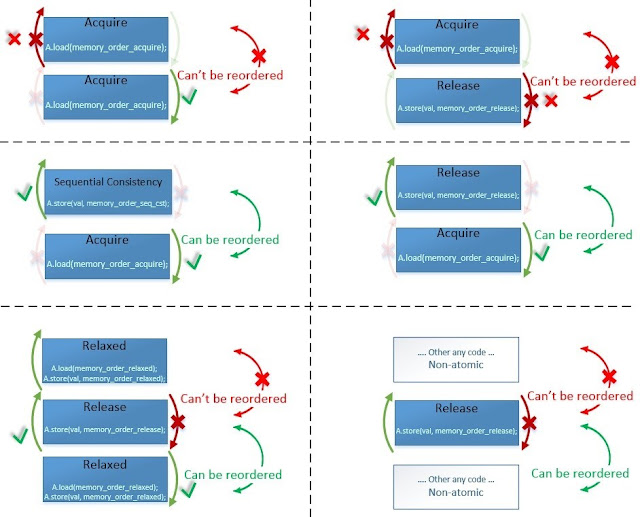

What exactly is the compiler doing in std::memory_order:

1,6: The compiler generates the assembler instructions acquire-barrier for the load operation and the release-barrier for the store operation, if these barriers are necessary for the given CPU architecture

2: The compiler cancels the previous caching of variables in the CPU registers in order to reload the values of these variables changed by another thread - after the load(acquire) operation

5: The compiler saves the values of all variables from the CPU registers to the memory so that they can be seen by other threads, i.e., it executes spilling - up to store(release)

3,4: The compiler prevents the optimizer from changing the order of the instructions in the forbidden directions - indicated by red arrows

With above knowledge, let's coin the spinlock class:

class spinlock_t {

std::atomic_flag lock_flag;

public:

spinlock_t() { lock_flag.clear(); }

bool try_lock() { return !lock_flag.test_and_set(std::memory_order_acquire); }

void lock() { for (size_t i = 0; !try_lock(); ++i)

if (i % 100 == 0) std::this_thread::yield(); }

void unlock() { lock_flag.clear(std::memory_order_release); }

};The following information about details in assembler x86_64, when the compiler cannot interchange the assembler instructions for optimization:

- seq_cst. The main difference (Clang and MSVC) from GCC is when you use the Store operation for the Sequential Consistency semantics, namely:

a.store (val, memory_order_seq_cst);

in this case, Clang and MSVC generate the

[LOCK] XCHG reg, [addr]

instruction, which cleans the CPU store-buffer in the same way as the MFENCE barrier does.

And GCC in this case uses two instructions

MOV [addr], reg and MFENCE - RMW (CAS, ADD…) always seq_cst.

As all atomic RMW (Read-Modify-Write) instructions on x86_64 have the LOCK prefix, which cleans the store-buffer, they all correspond to the Sequential-Consistency semantics at the assembler code level.

Any memory_order for RMW generate an identical code, including memory_order_acq_rel. - LOAD(acquire), STORE(release).

As you can see, on x86_64, the first 4 memory barriers (relaxed, consume, acquire, release) generate an identical assembler code - i.e., x86_64 architecture provides the acquire-release semantics automatically. Besides, it is provided by the MESIF (Intel) / MOESI (AMD) cache coherency protocols.

This is only true for the memory allocated by the usual compiler tools, which is marked by default as Write Back (but it’s not true for the memory marked as Un-cacheable or Write Combined, which is used for work with the Mapped Memory Area from Device - only Acquire- Semantic is automatically provided in it).

Dependent operations cannot ever be reordered anywhere

Read-X – Read-Y

Read-X – Write-Y

Write-X – Write-Y

Acquire-Release vs. Sequential-Consistent total order

There is one more feature of the data exchange between the threads, which is manifested upon interaction of four threads or more. If at least one of the following operations does not use the most stringent barrier memory_order_seq_cst, then different threads can see the same changes in different order. For example:

- If thread-1 changed some value first

- And thread-2 changed some value second

- Then thread-3 can first see the changes made by thread-2, and only after that it will see the changes made by thread-1

- And thread-4, on the contrary, can first see the changes made by thread-1, and only after that, it will see the changes made by thread-2

This is possible because of the hardware features of the cache-coherent protocol and the topology of location of the cores in the CPUs. In this case, some two cores can see the changes made by each other before they see the changes made by other cores. In order that all threads could see the changes in the same order, i.e., they would have a single total order (C++ 11 § 29.3 (3)), it is necessary that all operations (LOAD, STORE, RMW) would be performed with the memory barrier memory_order_seq_cst

Acquire-Release Ordering

Acquire-Release vs. Sequential-Consistency reordering

SC is SLOW.

Generates store-buffer cleaning (MFENCE x86_64) and, at x86_64 level, asm actually correspond to the slowest semantics of the Sequential Consistency.

There is a type of algorithm that is classified as write contention-free - when there is not a single memory cell in which it would be possible to write more than one thread.

In a more general case, there is not a single cache line in which it would be possible to write more than one thread. In order to have our shared-mutex be classified as write contention-free only in the presence of readers, it is necessary that readers do not interfere with each other - i.e., each reader should write a flag (which is read by it) to its own cell and remove the flag in the same cell at the end of reading - without RMW operations.

It is possible that each cell is located in a separate cache line to exclude false-sharing, and it is possible that cells lie tightly - 16 in one cache line - the performance loss will depend on the CPU and the number of threads.

Before setting the flag, the reader checks if there is a writer - i.e., if there is an exclusive lock. And since shared-mutex is used in cases where there are very few writers, then all the used cores can have a copy of this value in their cache-L1 in shared-state (S), where they will receive the value of the writer’s flag for 3 cycles, until it changes.

For all writers, as usually, there is the same flag want_x_lock - it means that there is a writer at the moment. The writer threads set and remove it by using RMW-operations.

No comments:

Post a Comment

Note: Only a member of this blog may post a comment.